What are COPPA App Guidelines and Why Do They Matter?

By Quan Nguyen

May 19, 2021

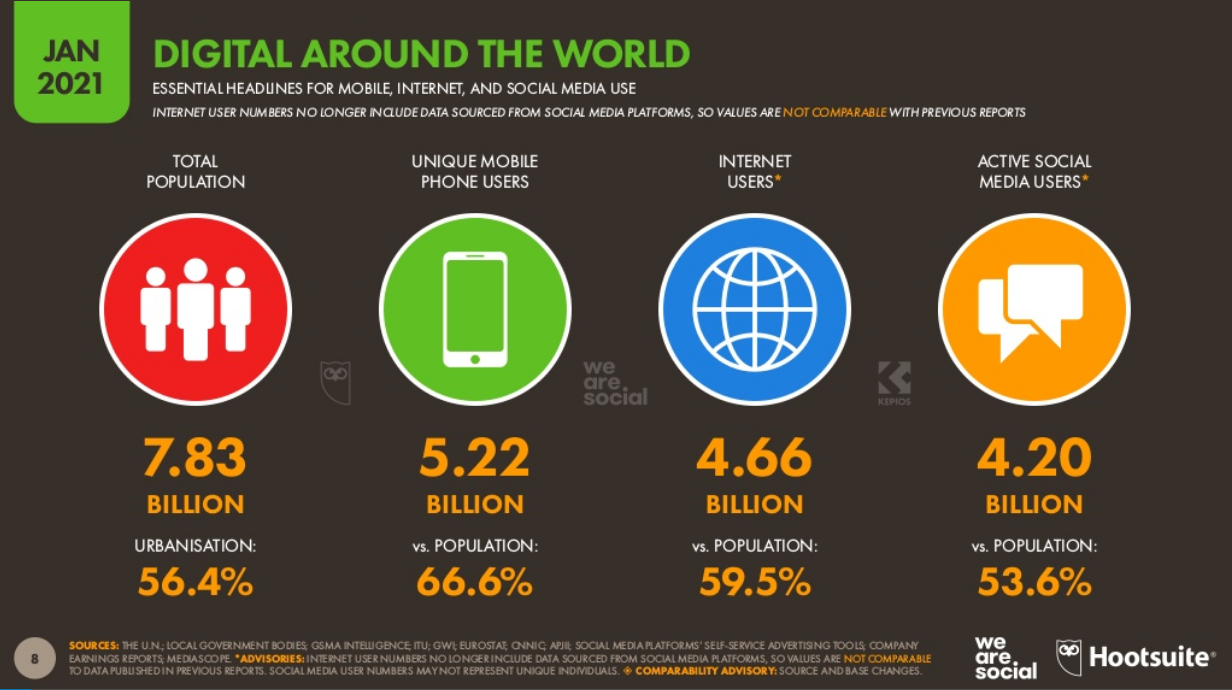

While we stand at the peak of technology, applications on computers and smart devices threaten children’s privacy and security. With the mass production and distribution of digital devices to most of the world’s population, the internet is becoming increasingly accessible. 66% of the world’s population has access to smartphones, and more than half of the child population in 2019 had smartphones; experts expect both numbers to continue rising. To combat threats against children online, the United States Congress passed the Children’s Online Privacy Protection Act (COPPA), which sets out guidelines that businesses must comply with.

What are COPPA and COPPA App Guidelines?

In short, COPPA is a law enacted in 1998 to regulate businesses that direct content toward children (defined as under the age of 13 in the USA). Operators (people or businesses maintaining and collecting information via websites) are responsible for following COPPA guidelines to avoid financial penalties.

COPPA app guidelines are procedures that outline how operators must tailor their websites and processes to protect young users. The law intends to protect children’s privacy by limiting both the information collected from children and how that information is shared. Required practices include obtaining verifiable parental consent before collecting or distributing a child’s PII (Personally Identifiable Information), retaining data only as long as reasonably necessary for the purpose it was collected for, and prominently disclosing the company’s privacy policy. In practice, the guidelines also restrict personalized ads and communication or comment features for child users on internet platforms. The Federal Trade Commission (FTC) enforces these guidelines, and penalties for violations can reach millions of US dollars.
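To make the parental consent requirement concrete, here is a minimal sketch of how an app might gate PII collection on age and consent. The `User` class, the `parental_consent` flag, and the `may_collect_pii` function are hypothetical names chosen for illustration; the sketch assumes consent has already been verified elsewhere and covers only the under-13 consent check, not the full set of COPPA obligations.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

COPPA_AGE_THRESHOLD = 13  # COPPA protects children under 13


def age_on(birth_date: date, today: date) -> int:
    """Return the age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


@dataclass
class User:
    birth_date: date
    # Hypothetical flag: set only after a parent's consent has been verified.
    parental_consent: bool = False


def may_collect_pii(user: User, today: Optional[date] = None) -> bool:
    """Allow PII collection for users 13 and older, or for children with consent."""
    today = today or date.today()
    if age_on(user.birth_date, today) >= COPPA_AGE_THRESHOLD:
        return True
    return user.parental_consent
```

A check like this relies on a self-reported birth date, so it is only as reliable as the age information the user provides.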

Why Do COPPA App Guidelines Matter?

These guidelines are crucial to preventing unscrupulous businesses from collecting children’s information and selling it to advertisers. COPPA deters marketers from using misleading campaigns and collection methods, such as tracking cookies, that violate children’s privacy. In addition to letting parents request deletion of existing PII, COPPA gives parents control over what operators do with their child’s data. Parents can consent to or refuse data collection, which lessens the risk of a child leaking information about themselves. Because of immaturity and lack of experience, younger audiences are likely to endanger their privacy on the web, sometimes unknowingly. Many have argued that the age protected by COPPA should be raised, since those 13 and older can also make rash decisions.

While COPPA provides app guidelines that drive away manipulative practices, there are still concerns and ambiguities surrounding those guidelines. How is it determined if a site is directed toward children? What constitutes data collection? Sites like YouTube offer their own guides for determining whether content is “made for kids,” but these guides are ultimately not definitive because content varies too much. Additionally, children can enter false birth dates and use screen names that hide their identity, which lets them access sites not directed at children.

Incident Cases

A 2019 case involving Google’s COPPA violation drastically altered the process for content creators and viewers. To put the severity of this law into perspective, Google and YouTube agreed to pay $170 million to the FTC and the state of New York to settle allegations that they violated COPPA guidelines. Because YouTube had collected information from children without parental consent, content creators are now required to disclose whether their content is made for children. Similarly, Google and Apple removed three dating apps from their app stores after the FTC warned that children as young as 12 could access them. These cases show that threats to child privacy under COPPA are not tolerated and that large-scale penalties can follow.

Solutions

What options are available to strengthen child privacy? U.S. Representatives Tim Walberg and Bobby Rush created an “eraser button” bill that made progress in the last year and was introduced in March 2021. Dubbed the Preventing Real Online Threats Endangering Children Today Act, or the PROTECT Kids Act, the bill aims to let parents have material about their children completely deleted upon request. The PROTECT Kids Act also pushes to raise the age of COPPA protection to 16.

For parents, many businesses are offering an “email plus” option where parents grant consent through their email. Parents are notified when operators make privacy policy changes and can withdraw consent at any time. For operators, the Toy Industry Association has released a “Do’s and Don’ts” guide to complying with COPPA.

To provide clients with the highest level of privacy and security, companies should also implement humanID. humanID creates a unique identifier through SMS. The encrypted identifier is irreversible, and we erase any personally identifiable information. This process eliminates the risk of providing more information than necessary when using Single Sign-On services or other log-in methods. Note that humanID is not an alternative to following COPPA guidelines; the service does not satisfy FTC requirements for child privacy, but it does protect all clients against the collection of personal user data. Ultimately, humanID users benefit from anonymity and shielding from deceptive privacy policies or malicious operators.
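As a rough illustration of what an irreversible identifier can look like, the sketch below derives a keyed hash from a phone number and keeps only the hash. This is an assumption for illustration, not humanID’s actual implementation: the `derive_identifier` function, the use of HMAC-SHA256, and the `app_secret` parameter are all hypothetical.

```python
import hashlib
import hmac


def derive_identifier(phone_number: str, app_secret: bytes) -> str:
    """Derive a one-way identifier from a phone number (illustrative only)."""
    # Keep digits only so differently formatted inputs map to the same value.
    normalized = "".join(ch for ch in phone_number if ch.isdigit())
    # A keyed hash cannot be recomputed or brute-forced without the secret.
    digest = hmac.new(app_secret, normalized.encode("utf-8"), hashlib.sha256)
    # Only the hex digest is returned; the caller discards the phone number.
    return digest.hexdigest()
```

The secret key matters in a design like this because phone numbers have a small search space, so an unkeyed hash could be reversed by trying every possible number.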

Conclusions

Computer and mobile applications today are convenient and accessible, but they also enable excessive data collection on minors. The FTC seeks to mitigate these privacy risks by enforcing COPPA and requiring operators to follow its guidelines. Some operators still attempt to collect children’s data, but they often face severe legal action. As lawmakers take child privacy seriously, parents should also take precautions by monitoring children’s online behavior and consenting only to reasonable policies. In the meantime, operators should carefully adhere to COPPA guidelines and implement additional security measures such as humanID, providing their users with the utmost protection.